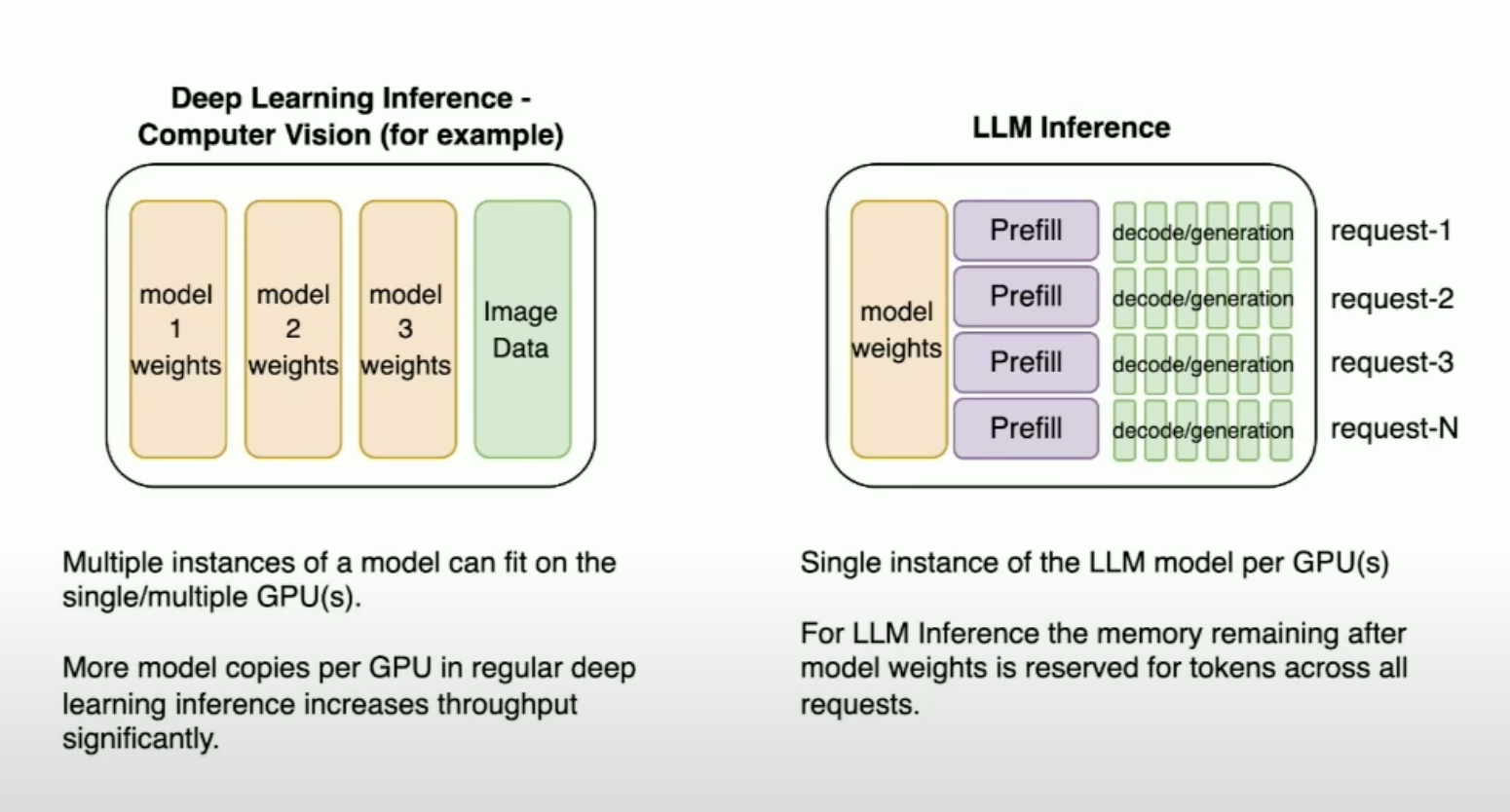

Prefill vs generation, compute bound vs memory bound

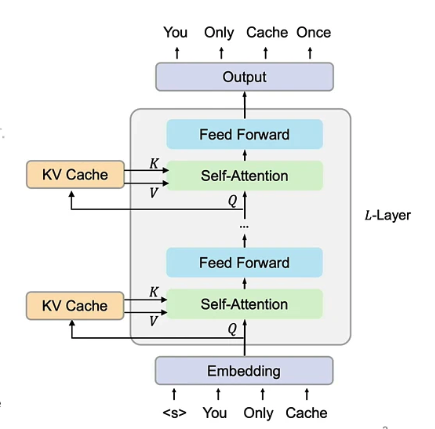

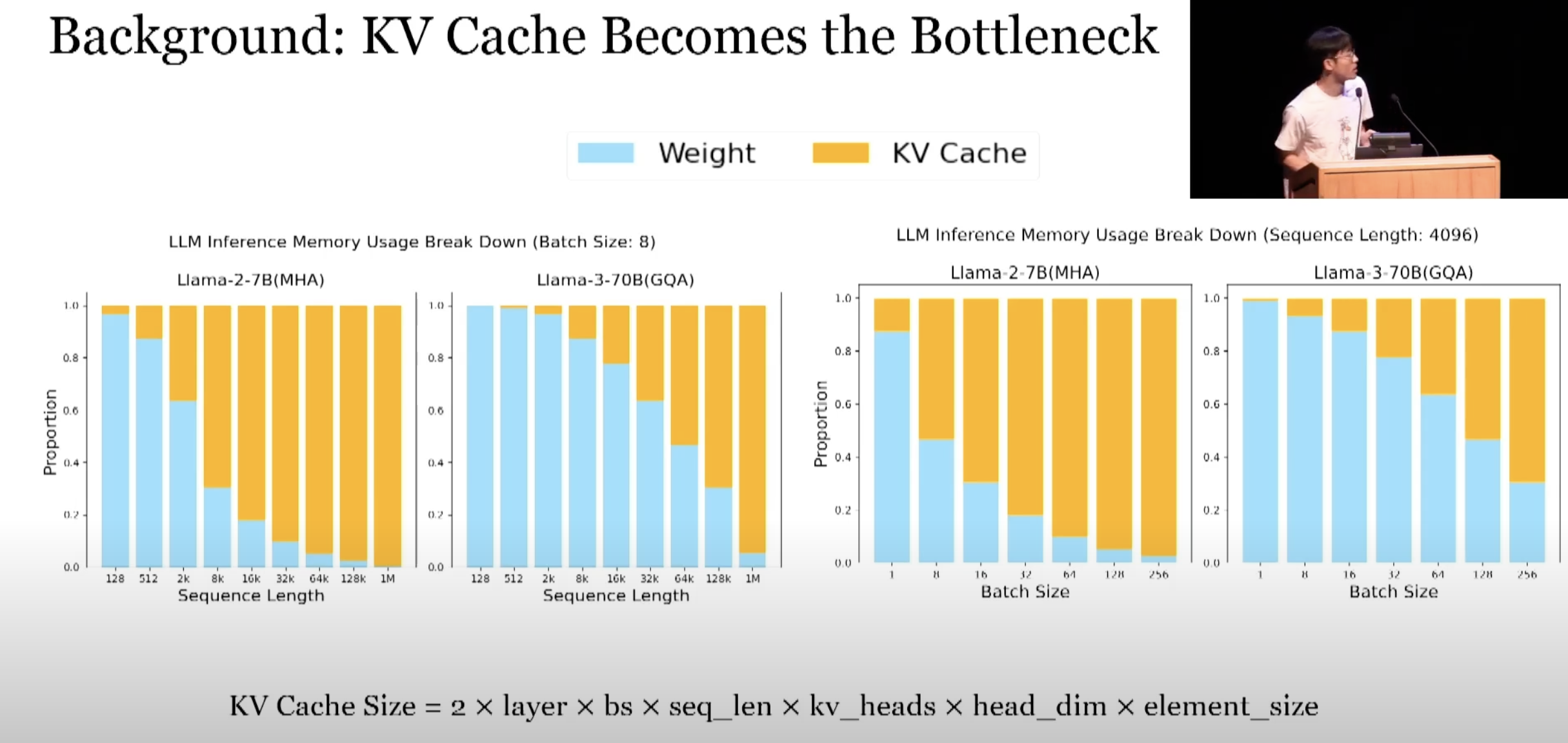
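A rough way to see why prefill tends to be compute bound and generation memory bound is to compare arithmetic intensity (FLOPs per byte of weights read) with the hardware's ridge point. The sketch below assumes the common approximation of about 2 FLOPs per parameter per token and ignores KV cache and activation traffic. The accelerator numbers are hypothetical, not a real device.

```python
def arithmetic_intensity(tokens_in_batch, bytes_per_param=2):
    """Approximate FLOPs per byte of weights read in one forward pass.

    Assumes ~2 FLOPs per parameter per token and that the weights are
    read from memory once per pass (KV cache traffic is ignored).
    """
    return 2 * tokens_in_batch / bytes_per_param


# Hypothetical accelerator, for illustration only.
PEAK_FLOPS = 300e12       # FLOP/s
MEM_BANDWIDTH = 1.5e12    # bytes/s
RIDGE = PEAK_FLOPS / MEM_BANDWIDTH  # FLOPs per byte needed to saturate compute


def bound(tokens_in_batch):
    """Classify a forward pass as compute-bound or memory-bound."""
    if arithmetic_intensity(tokens_in_batch) >= RIDGE:
        return "compute-bound"
    return "memory-bound"


prefill = bound(2048)  # one forward pass over a 2048-token prompt
decode = bound(1)      # one forward pass for a single new token
print(prefill, decode)
```

Under these assumptions, batching more decode requests together is what moves generation toward the ridge point.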

KV Cache

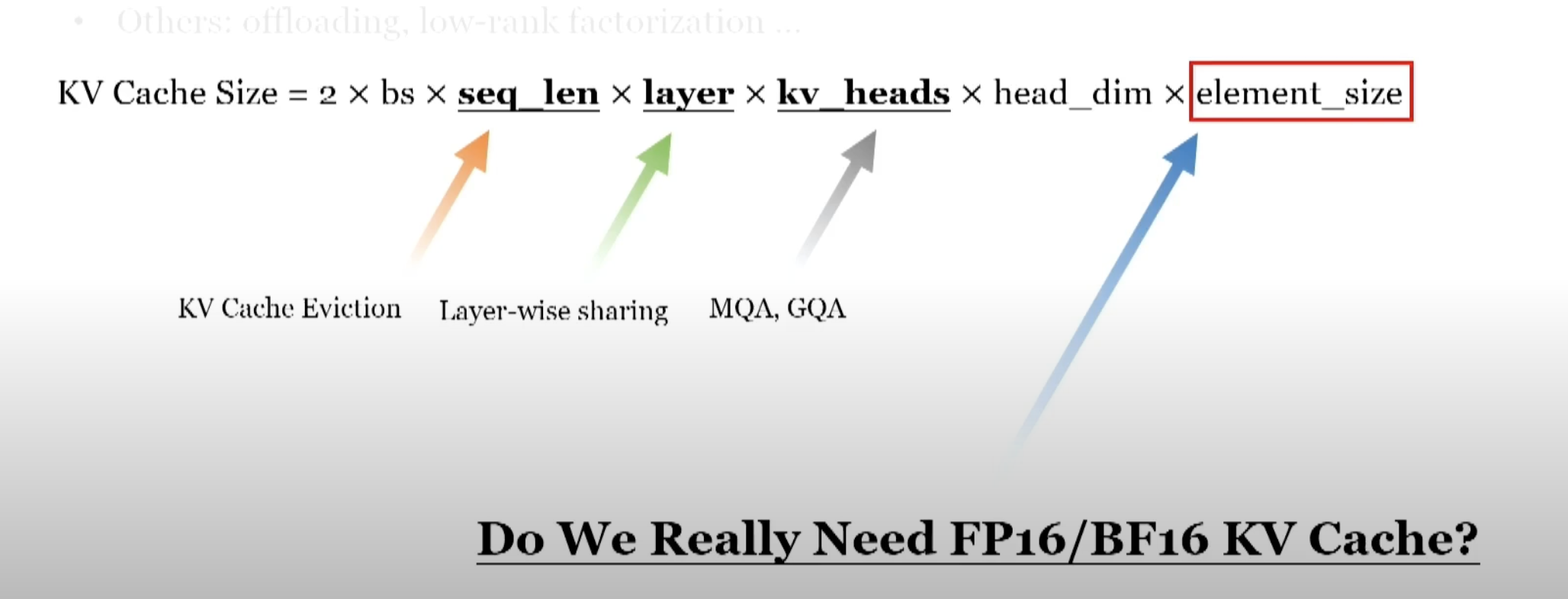

Grouped Query Attention

Fewer key and value heads, each shared by a group of query heads, to shrink the KV cache
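The saving can be estimated with simple arithmetic. This is a minimal sketch; the model shape (32 layers, head dimension 128, 32 vs 8 KV heads) is a hypothetical example, and 2 bytes per element assumes a 16-bit dtype.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size,
                   bytes_per_elem=2):
    """Size of the KV cache in bytes.

    The leading factor 2 accounts for storing both keys and values
    in every layer.
    """
    return (2 * n_layers * n_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_elem)


# Hypothetical shape: multi-head attention keeps one KV head per query head.
mha = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128,
                     seq_len=4096, batch_size=1)
# Grouped query attention: 8 KV heads, each shared by 4 query heads.
gqa = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128,
                     seq_len=4096, batch_size=1)

print(mha / 2**30, "GiB vs", gqa / 2**30, "GiB")
```

The cache shrinks by the ratio of query heads to KV heads, here 4x.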

Paged Attention

Avoid reserving a padded, contiguous KV buffer per sample; instead split each sample's KV cache across small fixed-size pages that are allocated on demand
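The bookkeeping behind paging can be sketched as a block table that maps each sequence to a list of physical pages. This is an illustrative toy, not the implementation of any particular serving engine; `PagedKVAllocator` and its methods are hypothetical names.

```python
class PagedKVAllocator:
    """Toy page allocator: tracks which physical pages each sequence owns."""

    def __init__(self, num_pages, page_size):
        self.page_size = page_size
        self.free_pages = list(range(num_pages))
        self.block_tables = {}  # seq_id -> list of physical page ids
        self.seq_lens = {}      # seq_id -> number of tokens stored

    def append_token(self, seq_id):
        """Reserve a slot for one new token; returns (physical_page, offset)."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.seq_lens.get(seq_id, 0)
        if length % self.page_size == 0:
            # Last page is full (or the sequence is new): grab a fresh page.
            if not self.free_pages:
                raise MemoryError("no free KV pages")
            table.append(self.free_pages.pop())
        self.seq_lens[seq_id] = length + 1
        return table[length // self.page_size], length % self.page_size

    def free(self, seq_id):
        """Return all pages of a finished sequence to the free pool."""
        self.free_pages.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)


alloc = PagedKVAllocator(num_pages=4, page_size=16)
for _ in range(20):
    alloc.append_token("a")   # 20 tokens -> 2 pages
for _ in range(5):
    alloc.append_token("b")   # 5 tokens -> 1 page
pages_a = len(alloc.block_tables["a"])
pages_b = len(alloc.block_tables["b"])
free_before = len(alloc.free_pages)
alloc.free("a")
free_after = len(alloc.free_pages)
```

Waste is limited to the unused tail of each sequence's last page, rather than the gap between actual length and a padded maximum.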

Sliding Window Attention ⇒ Rolling Buffer KV Cache

- Exploring the Latency/Throughput & Cost Space for LLM Inference // Timothée Lacroix // CTO Mistral - YouTube

- SKVQ: Sliding-window Key and Value Cache Quantization for Large Language Models - YouTube

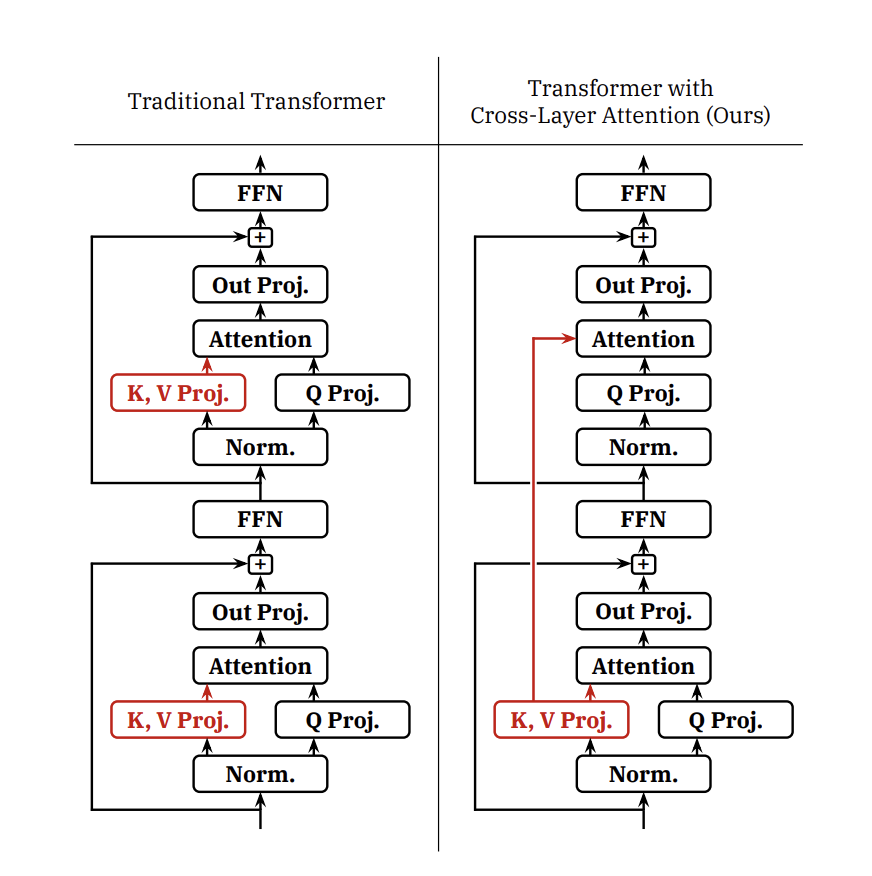
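With a sliding window of size W, only the last W keys and values are ever attended to, so the cache can be a fixed-size buffer where position i is written to slot i mod W. A minimal sketch, with `RollingKVCache` as a hypothetical name and strings standing in for KV tensors:

```python
class RollingKVCache:
    """Fixed-size KV cache: the entry for position i lives in slot i % window."""

    def __init__(self, window):
        self.window = window
        self.slots = [None] * window
        self.num_tokens = 0

    def append(self, kv):
        # Overwrites the oldest entry once more than `window` tokens were seen.
        self.slots[self.num_tokens % self.window] = kv
        self.num_tokens += 1

    def visible(self):
        """Cached entries in chronological order (at most `window` of them)."""
        start = max(0, self.num_tokens - self.window)
        return [self.slots[pos % self.window]
                for pos in range(start, self.num_tokens)]


cache = RollingKVCache(window=4)
for i in range(6):
    cache.append(f"kv{i}")
window_contents = cache.visible()
print(window_contents)
```

Memory stays constant in sequence length, at the cost of dropping everything older than the window.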

Cross Layer KV Cache Sharing

[2405.05254] You Only Cache Once: Decoder-Decoder Architectures for Language Models

StreamingLLM

Prompt / Prefix Caching

Keep the KV cache for common prompt prefixes (e.g. a shared system prompt) and reuse it across requests
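One way to look up reusable prefixes is to hash the prompt block by block, with each block's key depending on all tokens before it. The sketch below is an assumption about how such a lookup could work, not a specific engine's design; `PrefixCache`, `block_keys`, and the block size are hypothetical.

```python
import hashlib

BLOCK = 4  # tokens per cached block (hypothetical, small for illustration)


def block_keys(tokens):
    """One key per full block; each key covers the whole prefix up to it."""
    keys = []
    h = hashlib.sha256()
    full = len(tokens) - len(tokens) % BLOCK
    for i in range(0, full, BLOCK):
        h.update(repr(tokens[i:i + BLOCK]).encode())
        keys.append(h.copy().hexdigest())
    return keys


class PrefixCache:
    def __init__(self):
        self.store = {}  # prefix key -> KV block (placeholder string here)

    def match(self, tokens):
        """Number of leading tokens whose KV blocks are already cached."""
        hit = 0
        for key in block_keys(tokens):
            if key not in self.store:
                break
            hit += BLOCK
        return hit

    def insert(self, tokens):
        for idx, key in enumerate(block_keys(tokens)):
            self.store.setdefault(key, f"kv-block-{idx}")


system_prompt = list(range(8))  # stand-in token ids for a shared prefix
prefix_cache = PrefixCache()
prefix_cache.insert(system_prompt + [100, 101, 102, 103])
reused = prefix_cache.match(system_prompt + [200, 201, 202, 203])
print(reused, "tokens can skip prefill")
```

Only the tokens after the matched prefix need to be prefilled for the second request.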

Low Precision and Compression

Store weights and the KV cache in bf16, float8, int8, or smaller dtypes such as int4 to save memory
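As a rough illustration, the snippet below compares weight memory across dtypes for a hypothetical 7B-parameter model and shows a simple symmetric (absmax) int8 quantizer in pure Python. Real systems typically use per-channel or per-group scales; this is only a sketch.

```python
BYTES_PER_ELEM = {"fp32": 4, "bf16": 2, "float8": 1, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params, dtype):
    """Memory for the weights alone, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_ELEM[dtype] / 1e9


def quantize_int8(values):
    """Symmetric absmax quantization: one scale shared by all values."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid a zero scale
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    return [x * scale for x in q]


params = 7_000_000_000  # hypothetical model size
bf16_gb = weight_memory_gb(params, "bf16")
int4_gb = weight_memory_gb(params, "int4")

values = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8(values)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(values, restored))
```

The rounding error per value is at most half a quantization step, which is the price paid for the smaller footprint.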

Other

Speculative Decoding

- Dynamic Speculative Decoding

- How Speculative Decoding Boosts vLLM Performance by up to 2.8x | vLLM Blog

- [2403.09919] Recurrent Drafter for Fast Speculative Decoding in Large Language Models

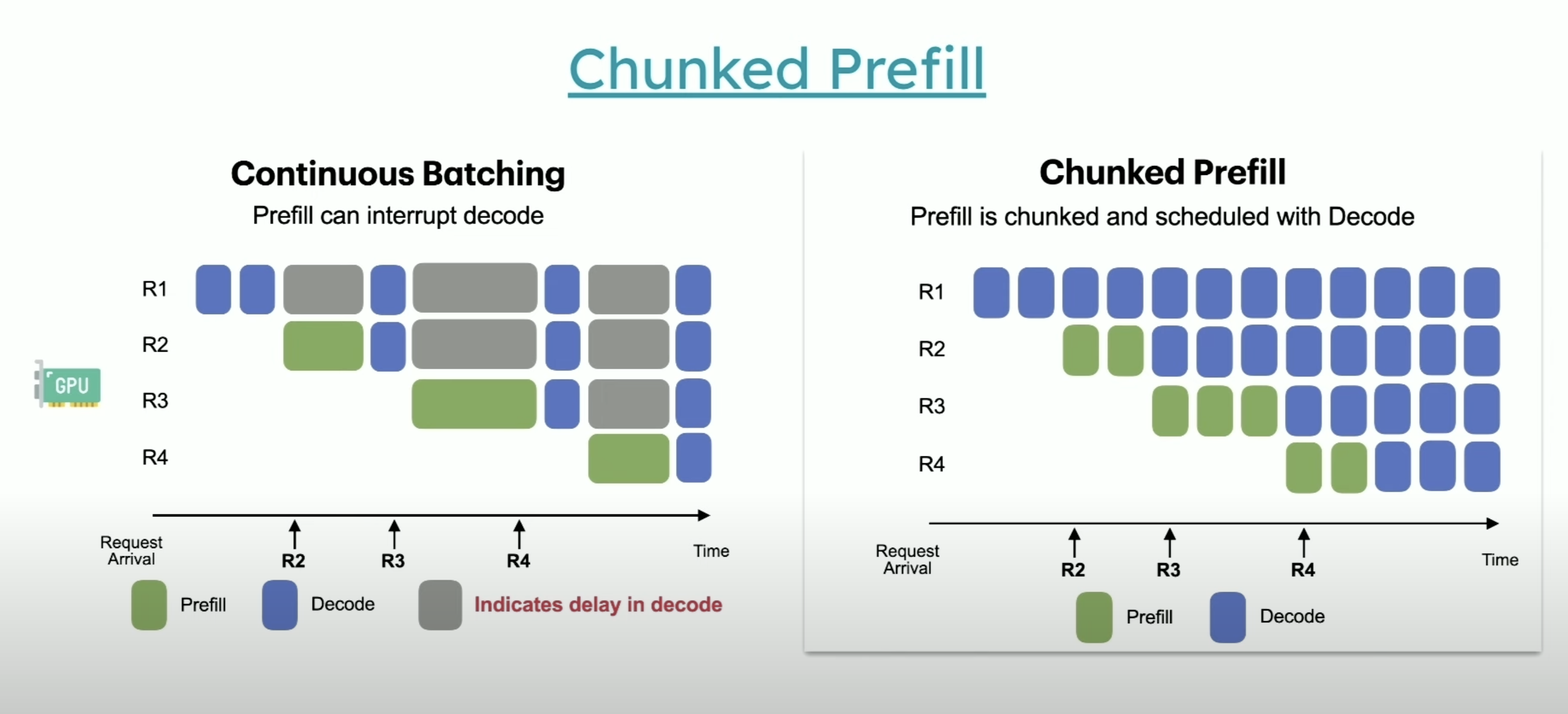

Chunked Prefill (for batched inference)

Split the prefill compute into chunks so that each unit of work is smaller and a large prefill does not stall other requests that are generating in the same batch
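A simple way to picture this is a per-step token budget: decoding requests each get one token first, and whatever budget is left goes to the next chunk of pending prefill. The scheduler below is a hypothetical sketch (the function name, budget, and request ids are illustrative), not the policy of any particular engine.

```python
def schedule_step(prefill_remaining, num_decoding, token_budget):
    """Plan one batch step.

    prefill_remaining: dict request_id -> prompt tokens not yet prefilled.
    Decoding requests consume one token of budget each; the rest of the
    budget is handed out as prefill chunks in insertion order.
    Returns dict request_id -> number of prompt tokens to prefill now.
    """
    budget = token_budget - num_decoding
    plan = {}
    for req_id, remaining in prefill_remaining.items():
        if budget <= 0:
            break
        chunk = min(remaining, budget)
        plan[req_id] = chunk
        budget -= chunk
    return plan


pending = {"long_prompt": 1000}
steps = 0
while pending:
    plan = schedule_step(pending, num_decoding=8, token_budget=256)
    for req_id, chunk in plan.items():
        pending[req_id] -= chunk
        if pending[req_id] == 0:
            del pending[req_id]
    steps += 1
print("prefill finished after", steps, "steps")
```

The 8 decoding requests keep producing a token every step while the 1000-token prompt is prefilled over several steps instead of one long one.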

Disaggregated Prefill and Decoding

Run prefill and decoding on separate GPUs, e.g. prefill on larger GPUs and decoding on smaller, cheaper ones

- [2401.09670] DistServe: Disaggregating Prefill and Decoding for Goodput-optimized Large Language Model Serving

- DistServe: disaggregating prefill and decoding for goodput-optimized LLM inference - YouTube

Prefix Caching

RadixAttention

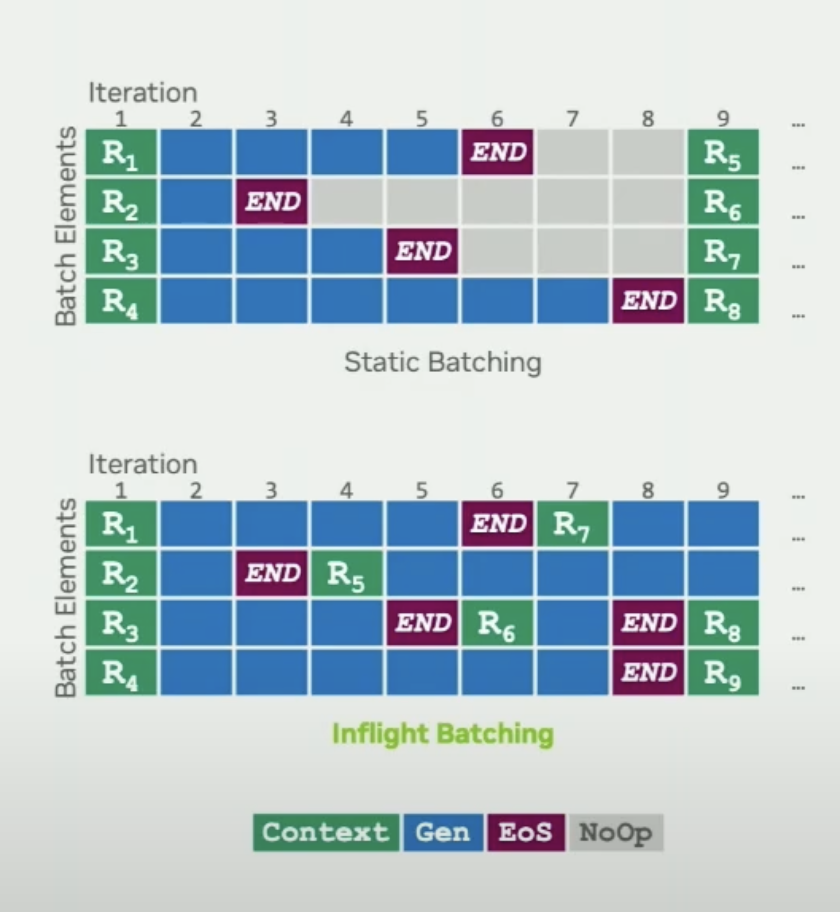

Continuous Batching / In-flight Batching

Flash Attention

Flash Decoding

Mixture of Experts

Reduce compute per token by routing each token to only a few experts and skipping the rest
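A minimal sketch of top-k routing for a single token, assuming the router weights are a softmax over the selected experts' logits (one common choice; other normalizations exist). Experts are plain Python callables here, and all names are hypothetical.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax over just those."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)  # subtract max for stability
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts; the others are never called."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))


calls = []  # records which experts actually ran


def make_expert(idx):
    def expert(x):
        calls.append(idx)
        return x * (idx + 1)
    return expert


experts = [make_expert(i) for i in range(8)]
logits = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 1.0]
y = moe_forward(1.0, experts, logits, k=2)
print(y, sorted(calls))
```

Only 2 of the 8 experts run for this token, so the per-token compute is a fraction of the total parameter count, although all expert weights still need to be held in memory.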

Quantization

Structured Decoding / Generation

LLM Routing

Predict the best model (by speed, cost, or expertise) for a given request and route the request to it

Metrics

Time to First Token

Inter Token Latency
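Both metrics can be computed from per-token arrival timestamps. A minimal sketch, assuming inter token latency is reported as the mean gap between consecutive tokens (some tools report percentiles instead); the function name and timestamps are illustrative.

```python
def latency_metrics(request_start, token_times):
    """Return (time_to_first_token, mean_inter_token_latency) in seconds.

    request_start: time the request was sent.
    token_times: arrival time of each generated token, in order.
    """
    ttft = token_times[0] - request_start
    gaps = [b - a for a, b in zip(token_times, token_times[1:])]
    itl = sum(gaps) / len(gaps) if gaps else 0.0
    return ttft, itl


ttft, itl = latency_metrics(0.0, [0.5, 0.55, 0.60, 0.70])
print(f"TTFT={ttft:.3f}s ITL={itl:.3f}s")
```

Time to first token is dominated by prefill (plus queueing), while inter token latency reflects the decode steps.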

Links

- Understanding the LLM Inference Workload - Mark Moyou, NVIDIA - YouTube

- Mastering LLM Techniques: Inference Optimization | NVIDIA Technical Blog

- Optimizing AI Inference at Character.AI

- [2402.16363] LLM Inference Unveiled: Survey and Roofline Model Insights

- [2407.12391] LLM Inference Serving: Survey of Recent Advances and Opportunities

- Optimizing AI Inference at Character.AI (Part Deux)