2024-08-26

Papers

LLM distillation

- [2408.11796] LLM Pruning and Distillation in Practice: The Minitron Approach

- to SSMs

- [2408.15664] Auxiliary-Loss-Free Load Balancing Strategy for Mixture-of-Experts

Multimodal Models

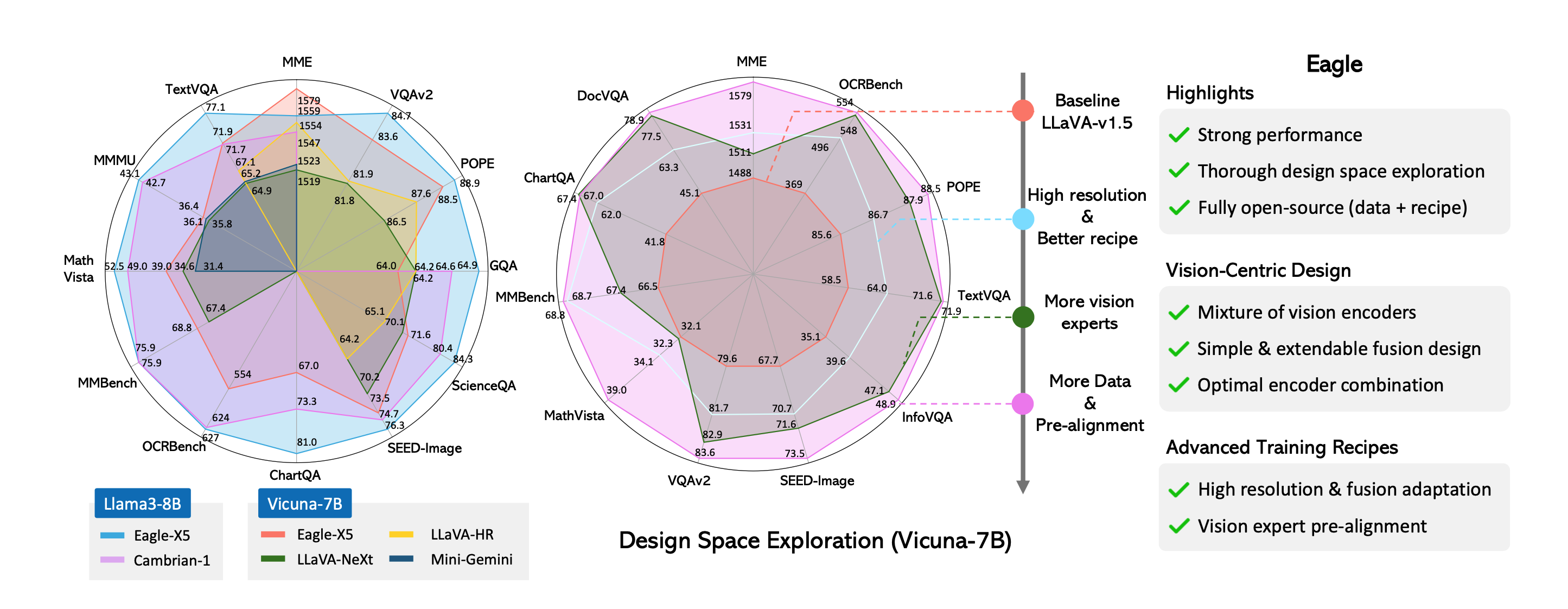

- [2408.15998] Eagle: Exploring The Design Space for Multimodal LLMs with Mixture of Encoders #vlm

- LLaVA-OneVision: Easy Visual Task Transfer

- Qwen2-VL: To See the World More Clearly | Qwen

- GitHub - QwenLM/Qwen2-VL: Qwen2-VL is the multimodal large language model series developed by Qwen team, Alibaba Cloud.

- Binyuan Hui on X: “Try our new Qwen2-VL: https://t.co/uyIeCOtRUa ⚠️ Three Secrets of Success for Qwen2-VL ⚠️ 1️⃣ A key architectural improvement in Qwen2-VL is the implementation of Naive Dynamic Resolution support. Unlike its predecessor, Qwen2-VL can handle arbitrary image resolutions, mapping https://t.co/QtokQgJcqT”

- [2408.16500] CogVLM2: Visual Language Models for Image and Video Understanding

Code

Datasets

Articles

- Evaluating the Effectiveness of LLM-Evaluators (aka LLM-as-Judge)

- LLM Evaluation doesn’t need to be complicated

Videos

- Neural and Non-Neural AI, Reasoning, Transformers, and LSTMs

- The Mamba in the Llama: Distilling and Accelerating Hybrid Models

- Stanford CS229 I Machine Learning I Building Large Language Models (LLMs)

- Arvind Narayanan: AI Scaling Myths, The Core Bottlenecks in AI Today & The Future of Models | E1195

- Anthropic CEO Dario Amodei on AI’s Moat, Risk, and SB 1047

Tweets
