2025-01-06

Models

- microsoft/phi-4 · Hugging Face

- GitHub - NovaSky-AI/SkyThought: Sky-T1: Train your own O1 preview model within $450

Papers

- [2412.10849] Superhuman performance of a large language model on the reasoning tasks of a physician

- [2501.02497] Test-time Computing: from System-1 Thinking to System-2 Thinking

- [2501.03120] CAT: Content-Adaptive Image Tokenization

- Cosmos World Foundation Model Platform for Physical AI

- [2410.10733] Deep Compression Autoencoder for Efficient High-Resolution Diffusion Models

- CheXagent: Towards a Foundation Model for Chest X-Ray Interpretation

- Accurate predictions on small data with a tabular foundation model | Nature

- [2501.04001] Sa2VA: Marrying SAM2 with LLaVA for Dense Grounded Understanding of Images and Videos

- [2501.03895] LLaVA-Mini: Efficient Image and Video Large Multimodal Models with One Vision Token

- [2501.04322] Eve: Efficient Multimodal Vision Language Models with Elastic Visual Experts

- [2312.13789] TinySAM: Pushing the Envelope for Efficient Segment Anything Model

- [2501.04568] Supervision-free Vision-Language Alignment

- [2501.04686] URSA: Understanding and Verifying Chain-of-thought Reasoning in Multimodal Mathematics

- [2501.04682] Towards System 2 Reasoning in LLMs: Learning How to Think With Meta Chain-of-Thought

- [2501.04519] rStar-Math: Small LLMs Can Master Math Reasoning with Self-Evolved Deep Thinking

- [2501.04155] MM-GEN: Enhancing Task Performance Through Targeted Multimodal Data Curation

- [2501.03078] Qinco2: Vector Compression and Search with Improved Implicit Neural Codebooks

- [2501.04699] EditAR: Unified Conditional Generation with Autoregressive Models

- [2501.05453] An Empirical Study of Autoregressive Pre-training from Videos

Code

- GitHub - NVIDIA/Cosmos: Cosmos is a world model development platform that consists of world foundation models, tokenizers and video processing pipeline to accelerate the development of Physical AI at Robotics & AV labs. Cosmos is purpose built for physical AI. The Cosmos repository will enable end users to run the Cosmos models, run inference scripts and generate videos.

- GitHub - hhhuang/CAG: Cache-Augmented Generation

- GitHub - PriorLabs/TabPFN: ⚡ TabPFN: Foundation Model for Tabular Data ⚡

- GitHub - xinghaochen/TinySAM: [AAAI 2025] Official PyTorch implementation of “TinySAM: Pushing the Envelope for Efficient Segment Anything Model”

- GitHub - cloneofsimo/minRF: Minimal implementation of scalable rectified flow transformers, based on SD3’s approach

- GitHub - nreHieW/minVAR: Minimal Implementation of Visual Autoregressive Modelling (VAR)

- GitHub - SonyResearch/micro_diffusion: Official repository for our work on micro-budget training of large-scale diffusion models.

Articles

- [Distributed w/ TorchTitan] Breaking Barriers: Training Long Context LLMs with 1M Sequence Length in PyTorch Using Context Parallel - PyTorch Forums

- Weighted Skip Connections are Not Harmful for Deep Nets

- Sky-T1: Train your own O1 preview model within $450

Videos

- Flash LLM - Sasha Rush LLM Training Short Series - YouTube

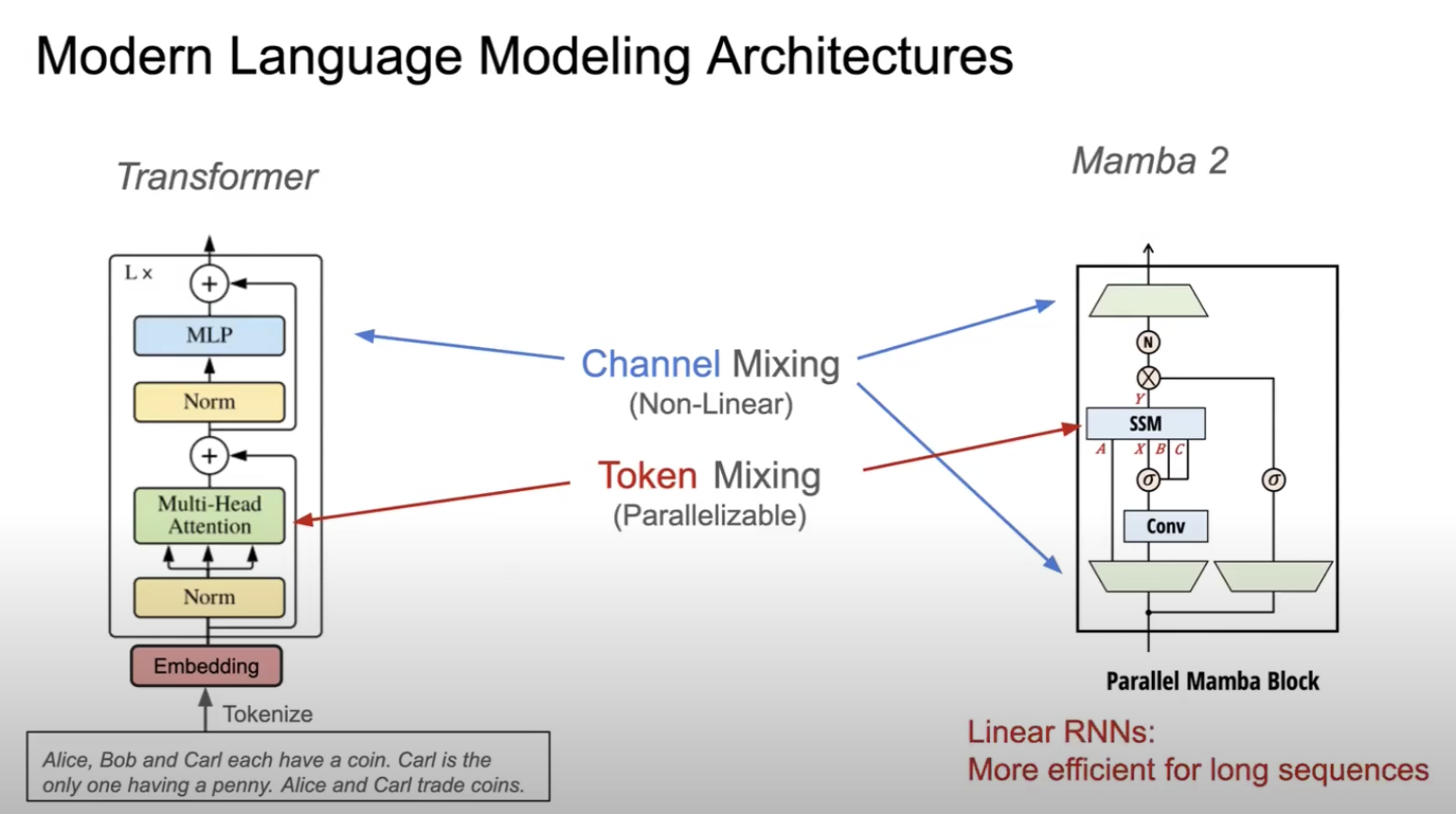

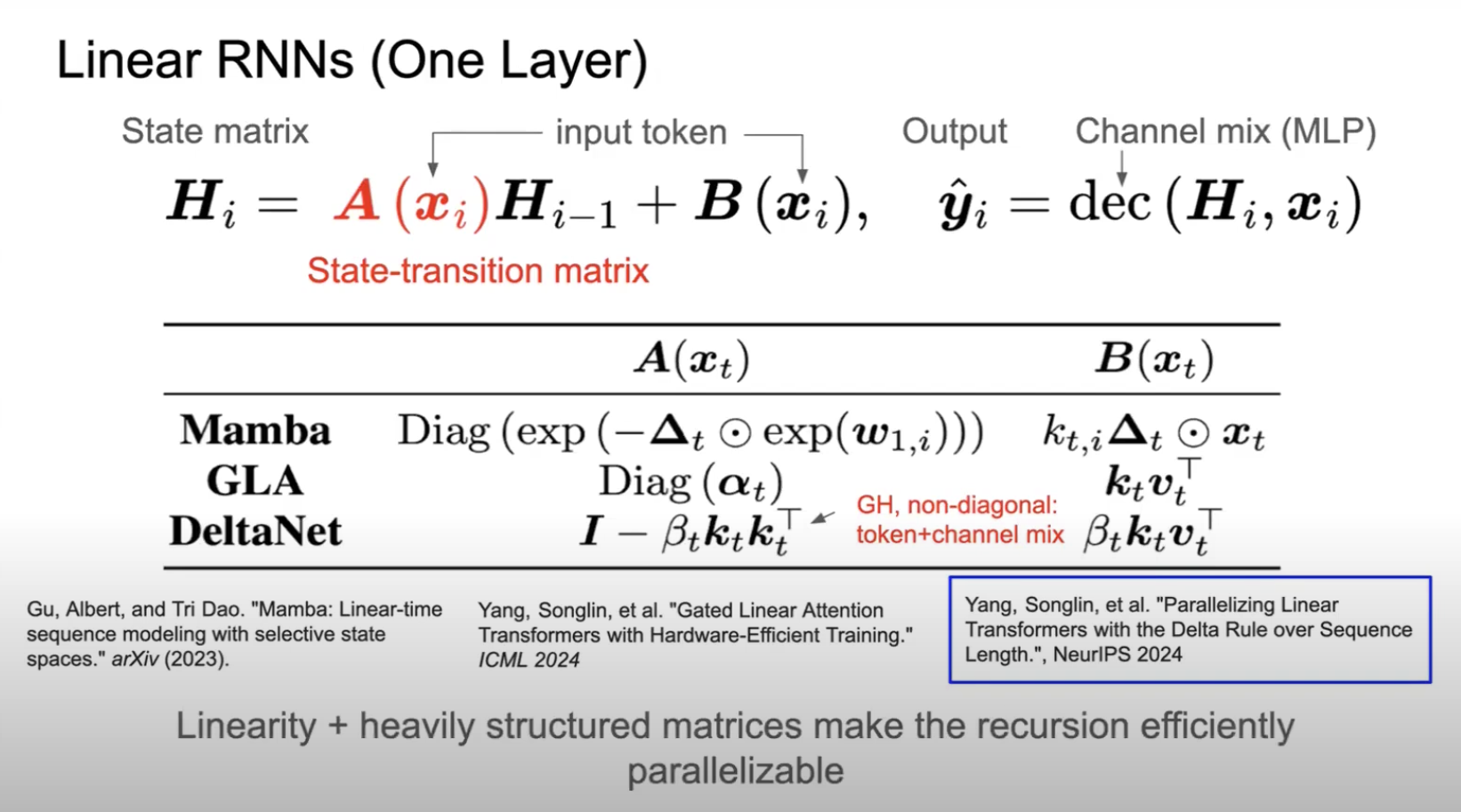

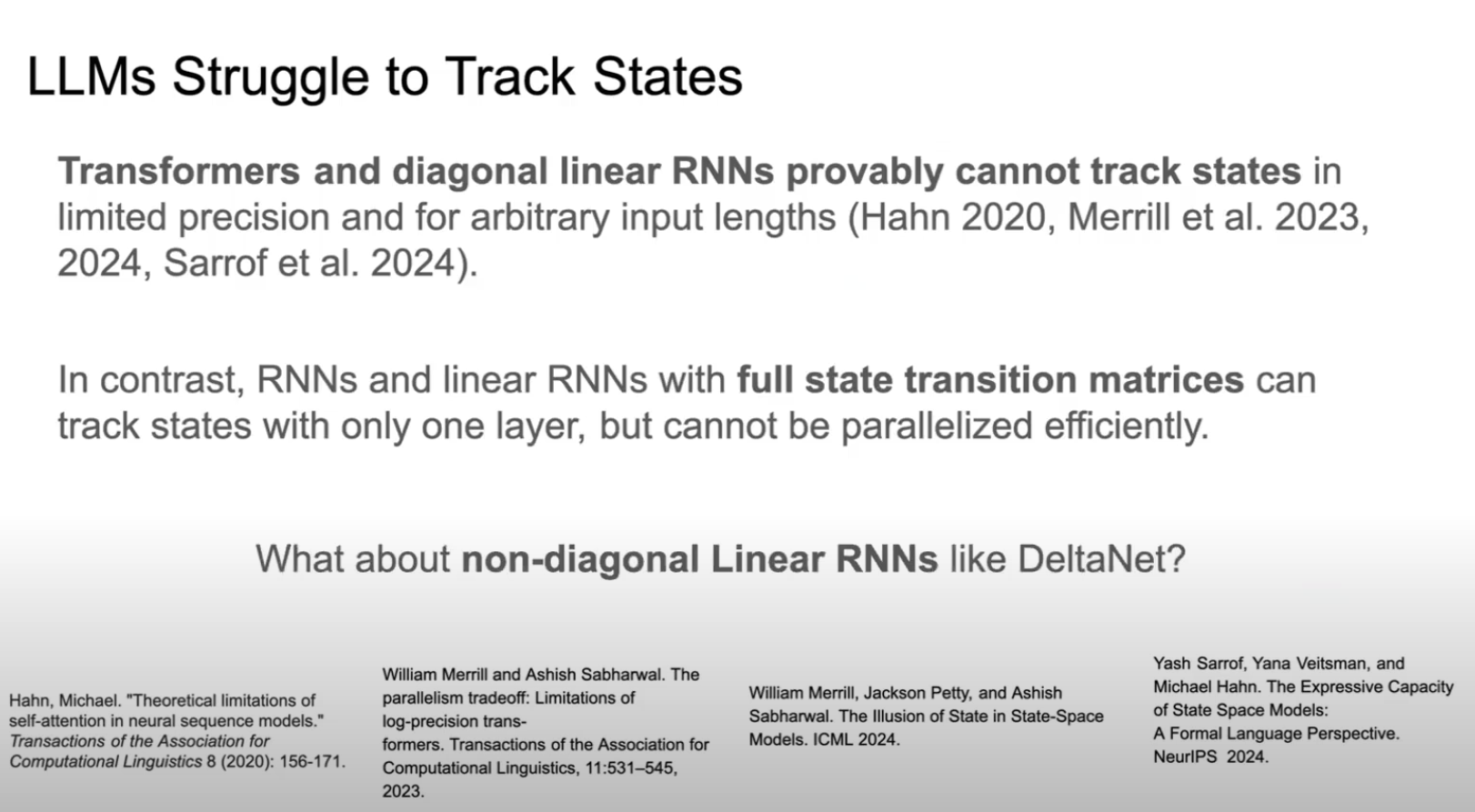

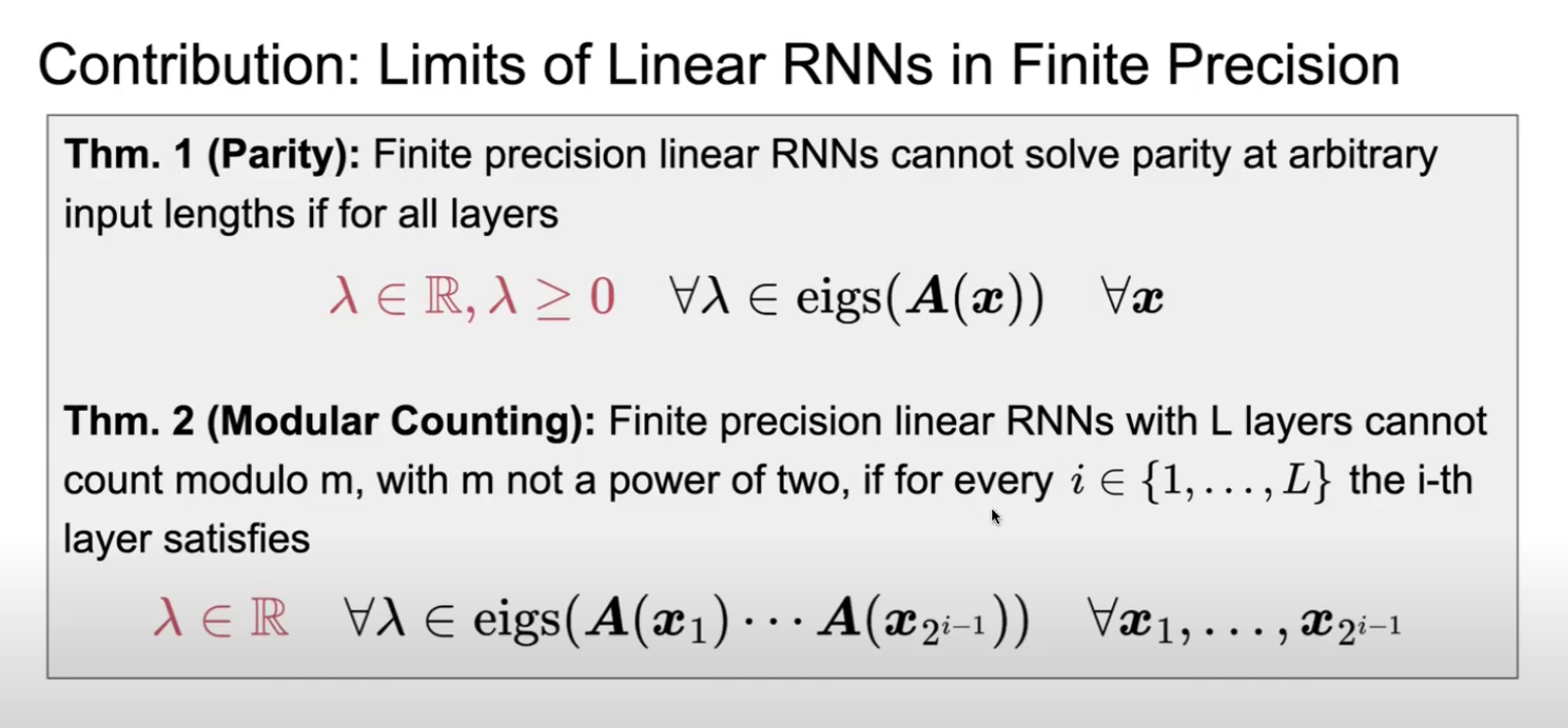

- Unlocking State-Tracking in Linear RNNs Through Negative Eigenvalues - YouTube

- ML Scalability & Performance Reading Group Session 4: Ring Attention - YouTube

Other


Tweets